^ Sorry guys, you may have noticed the blog post date is wrong. I won't change the URL, but thanks to how time works, this will be fixed in one month anyway :-D

The ReactEurope conference was incredibly inspiring and promising to me. Yesterday brought tons of news and tweets from the JavaScript community.

One tweet and blog post by the great @aerotwist got my attention.

I often hear claims that “the DOM is slow!” and “React is fast!”, so I decided to put that to the test:

https://t.co/M1RZZiyVT2

🐢vs🐇

— Paul Lewis (@aerotwist) July 3, 2015

I would like to share my opinion and feedback on using React here.

I've been using React for almost 2 years now, and always in performance intensive use-cases, from Games to WebGL.

I've created glsl.io and I'm working on Diaporama Maker. Both applications are built with React and combine HTML, SVG, and WebGL.

Diaporama Maker is probably the most ambitious piece of software I've ever personally done.

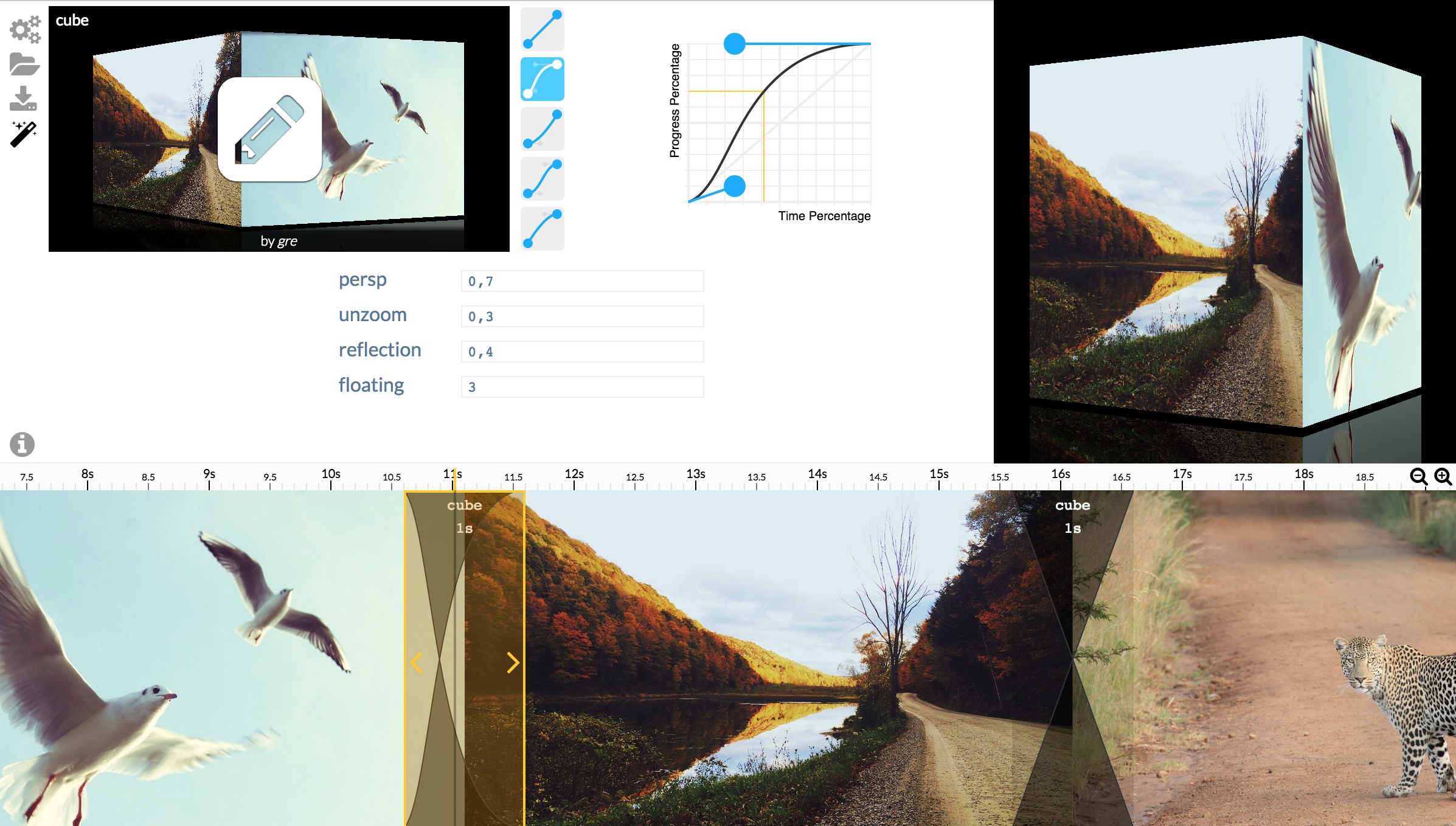

In short, Diaporama Maker is a WYSIWYG editor for web slideshows (mainly photo slideshows). It is a bit like iMovie with the web as its first target. The project is entirely open source.

Currently, I am able to render the whole application at 60 FPS, which is still unexpected and surprising to me (press Space to run the diaporama on the diaporama.glsl.io demo). More precisely, this would not have been possible without some optimizations that I will detail at the end of this article.

The point is productivity

I don't think Virtual DOM claims to be faster than doing Vanilla DOM, and that's not really the point. The point is productivity. You can write very well optimized code in Vanilla DOM but this might require a lot of expertise and a lot of time even for an experienced team (time that should be spent focusing on making your product).

When it comes to adding new features and refactoring old ones, this gets worse. Without a well-constrained framework or paradigm, things do not scale far, become time-consuming, and introduce bugs, especially in a team where multiple developers have to work together.

See Also: Why does React scale? by @vjeux.

What matters to me

There are a lot of advantages to the Virtual DOM approach to consider before talking about React performance.

Of course, this always depends on what you are building, but I would claim that there is a long way to go using React before experiencing performance issues, and in the worst case, you can almost always find easy ways to fix them.

DX

React has an incredible Developer eXperience (that people seem to call DX nowadays!) that helps you improve UX, and it gives you the ability to measure performance and optimize it when you reach bottlenecks.

You can easily figure out which component is a bottleneck in the component tree, as shown in the following screenshot.

With printWasted() you can see how much time React has wasted calling render() on something that didn't change, and how many instances have been created (there are also printInclusive and printExclusive).

This is roughly equivalent to the browser's console profiler, except that it focuses on your application components, which is a very relevant approach.

React data flow

I can't imagine re-writing Diaporama Maker in Vanilla DOM.

In Diaporama Maker, I have a lot of cross dependencies between components. For instance, the current time is shared and used everywhere in the application.

As a matter of fact, dependencies grow when adding more and more features.

usages of time in 3 independent components.

The declarative Virtual DOM approach solves this problem very simply. You just pass props down to share data between components: there is one source of truth that flows down your component tree via "props".

With the Virtual DOM approach, the cost of adding one new dependency on shared data is small and does not grow as the application grows.
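As a rough sketch of this data flow, here plain functions stand in for React components so the example runs on its own. The component names (`TimeLabel`, `Timeline`, `Viewer`) and the prop names are hypothetical, not the actual ones used in Diaporama Maker:

```javascript
// Plain functions stand in for React components: each takes props and
// returns a description of what it would render. All names are hypothetical.
function TimeLabel(props) {
  return "time: " + (props.time / 1000).toFixed(1) + "s";
}

function Timeline(props) {
  // Cursor position in pixels, derived from the shared time.
  return { cursorX: Math.round(props.time * props.pixelsPerMs) };
}

function Viewer(props) {
  // Index of the slide that is visible at the shared time.
  return { slideIndex: Math.floor(props.time / props.slideDuration) };
}

// The root owns the single source of truth and passes it down as props.
function App(state) {
  return {
    label: TimeLabel({ time: state.time }),
    timeline: Timeline({ time: state.time, pixelsPerMs: 0.05 }),
    viewer: Viewer({ time: state.time, slideDuration: 4000 }),
  };
}

const rendered = App({ time: 9000 });
console.log(rendered);
```

Adding a fourth consumer of `time` would only mean passing one more prop from `App`; none of the existing components would need to know about it.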

another more complex showcase of shared states.

Using an event system, as you would in a standard Backbone approach, tends to lead to an imperative style and spaghetti code (and when using global events, components are not really reusable).

Moreover, I think that a Views<->Models event system approach, if not used carefully, tends to converge towards unmaintainable and laggy applications.

React is a Component library

React truly treats components as first-class citizens, which makes them reusable. I've tried alternatives like virtual-dom, and I don't think they emphasize this benefit enough.

There are important good practices when using React, like minimizing state and props, but I'm not going to expand on this subject. Most of these best practices are not exclusive to React but come from common sense and software architecture in general. One important point for performance is choosing a good granularity for your component tree. It is generally a good idea to split a component into pieces as small as possible, because this separates concerns, minimizes props, and consequently reduces the amount of rendering diff work.

Diaporama Maker architecture

You would be surprised to know that Diaporama Maker does not even use Flux (that might be reconsidered soon for collaborative features). I've just taken the old "callback as props" approach all the way down the component tree. That easily makes all components purely modular and re-usable (no dependencies on some Stores).
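To illustrate the "callback as props" idea, here is a minimal sketch without React. `SeekBar`, `createApp`, and `onSeek` are hypothetical names chosen for this example, and the `click` method stands in for what would be a DOM event handler in a real component:

```javascript
// Hypothetical sketch of "callbacks as props": the child never touches a
// store, it only calls the function it was given.
function SeekBar(props) {
  return {
    // In a real component this would be wired to a DOM event handler.
    click: function (x) {
      props.onSeek((x / props.width) * props.duration);
    },
  };
}

function createApp(duration) {
  const state = { time: 0 };
  function setTime(time) {
    // The root is the only place where state changes; clamp to [0, duration].
    state.time = Math.max(0, Math.min(duration, time));
  }
  function render() {
    return SeekBar({ width: 200, duration: duration, onSeek: setTime });
  }
  return { state: state, render: render };
}

const app = createApp(120000);
app.render().click(50); // simulate a click a quarter of the way along
console.log(app.state.time);
```

Because `SeekBar` depends only on its props, it can be moved to another application as long as the parent supplies an `onSeek` function.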

I've also taken the inline style approach without actually using any framework (this is just about props-passing style objects).

As a consequence, I've been able to externalize a lot of tiny components that are part of my application, so I can share them across apps and other people can re-use them.

What is important about externalizing components is also the ability to test and optimize them independently (the whole idea of modularity).

Here are all the standalone UI components used by Diaporama Maker:

- bezier-easing-editor

- bezier-easing-picker

- diaporama-react

- glsl-transition-vignette

- glsl-transition-vignette-grid

- glsl-uniforms-editor

- kenburns-editor

(each one has a standalone demo)

Optimizing performances

I've been working on crazy projects using React (like http://t.co/U2oETh5lhZ ). most performance issues i've met was not because of React

— Gaëtan Renaudeau (@greweb) July 4, 2015

Here are 2 examples of optimizations I had to do in Diaporama Maker that were not because of React:

- It is easy to write poorly optimized WebGL, so I worked a lot on optimizing the pipeline of the Diaporama engine.

- CSS transforms defined on Library images were, for a time, very expensive for the browser to render, so I am now using server-resized "thumbnails" instead of the full-size images. Asking the browser to recompute the transform: scale(...) of 50 high-resolution images can be very costly (without this optimization, resizing the application ran at about 2-3 FPS because the library thumbnails needed to recompute their scale and crop).

But what if you still have performance issues caused by React? Yes, this can happen.

Timeline Grid example

In Diaporama Maker, I have a component that generates a lot of elements (like 1300 elements for a 2-minute slideshow), and my first naive implementation was very slow. This component is TimelineGrid, which renders the timescale in the timeline. It is implemented with SVG and a lot of <text> and <line> elements.

The performance issue was noticeable when dragging and dropping items across the application. React was forced to render() and compare the whole timescale grid every time. But the timescale does not change! It just has 3 props:

<TimelineGrid timeScale={timeScale} width={gridWidth} height={gridHeight} />

So it was very easy to optimize, just by using the PureRenderMixin to tell React that all my props are immutable (I could have implemented shouldComponentUpdate too).
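The idea behind this optimization can be sketched without React: compare the new props with the previous ones shallowly, and skip the expensive work when nothing changed. The code below is only an illustration of that idea under the assumption that props are never mutated in place. It is not React's implementation, and `pureRender` and `renderGrid` are hypothetical helpers:

```javascript
// Shallow comparison: props are "equal" when they have the same keys and
// each value is identical (===). This is only safe if props are treated as
// immutable, which is the assumption the optimization relies on.
function shallowEqual(a, b) {
  if (a === b) return true;
  const keysA = Object.keys(a);
  const keysB = Object.keys(b);
  if (keysA.length !== keysB.length) return false;
  return keysA.every(function (key) {
    return Object.prototype.hasOwnProperty.call(b, key) && a[key] === b[key];
  });
}

// Hypothetical wrapper: skips the expensive render when props are unchanged.
function pureRender(renderFn) {
  let lastProps = null;
  let lastOutput = null;
  return function (props) {
    if (lastProps !== null && shallowEqual(lastProps, props)) {
      return lastOutput; // nothing changed: reuse the previous result
    }
    lastProps = props;
    lastOutput = renderFn(props);
    return lastOutput;
  };
}

let renderCount = 0;
const renderGrid = pureRender(function (props) {
  renderCount++;
  return "grid " + props.width + "x" + props.height + " @" + props.timeScale;
});

renderGrid({ timeScale: 0.1, width: 800, height: 40 });
renderGrid({ timeScale: 0.1, width: 800, height: 40 }); // skipped
renderGrid({ timeScale: 0.2, width: 800, height: 40 }); // re-rendered
console.log(renderCount);
```

With three primitive props like those of TimelineGrid, the comparison costs three equality checks, which is negligible next to rebuilding around 1300 SVG elements.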

After this step, and for this precise example, I don't think a Vanilla DOM implementation can reach better performance:

- when one of the grid parameters changes, EVERYTHING needs to be recomputed because all scales are changing.

- React even does smarter things that I would not do manually, like reusing elements instead of destroying and recreating them.

There might still be ways to go further in optimizing this example. For instance, I could chunk my grid into pieces and only render the pieces that are visible, like in an infinite scroll system (I could use something like sliding-window for this). That would probably be premature optimization for this example.
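As a sketch of what rendering only the visible pieces could look like, the function below computes which grid ticks fall inside the viewport for a given scroll position. It is a generic illustration and does not use the sliding-window library mentioned above. The function name and its parameters are assumptions made for this example:

```javascript
// Hypothetical sketch: given the scroll position, compute which grid ticks
// fall inside the visible window so only those need to be rendered.
function visibleTicks(scrollX, viewportWidth, pixelsPerTick, totalTicks) {
  const first = Math.max(0, Math.floor(scrollX / pixelsPerTick));
  const last = Math.min(
    totalTicks - 1,
    Math.floor((scrollX + viewportWidth) / pixelsPerTick)
  );
  const ticks = [];
  for (let i = first; i <= last; i++) {
    // x is the tick position relative to the left edge of the viewport.
    ticks.push({ index: i, x: i * pixelsPerTick - scrollX });
  }
  return ticks;
}

const ticks = visibleTicks(1000, 800, 10, 1300);
console.log(ticks.length, ticks[0], ticks[ticks.length - 1]);
```

In this example only 81 of the 1300 ticks would be rendered, at the cost of recomputing the window on every scroll.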

Wrap Up

To my mind, generic benchmarks always tend to be biased and do not represent real use-cases unless they really cover your application itself.

The TimelineGrid component optimization explained in this article is a very specific and well chosen example, but it is one counter-example for such a benchmark.

Each application has its own needs and constraints, and we can't really generalize one way to go. Also, performance should not be the main concern when choosing a technology.

It is easy to make Virtual DOM library benchmarks comparing the performance of rendering and array diffing, but does that cover 80% of use-cases? Is performance really the point? What tradeoff are you willing to make between performance and productivity?

Tell me what you think.

In the meantime, I think we can all continue getting applications done and developing amazing DX.