Here is Beez, a real-time web audio experiment that uses smartphones as synthesizer effect controllers.

This is our second Web Audio API experiment made in one Hackday at Zenexity (now Zengularity).

This time, we focused much more on getting the best latency: we used the bleeding-edge WebRTC technology, which lets you link clients Peer-to-Peer instead of through a classical Client-Server architecture.

- The project on Github

- Test it now! (Chrome)

Live demo of the Hackday application

Bonus for the one who recognizes the melody :-)

The Experiment

The experiment consists of controlling an audio stream running on a desktop web page with audio effect pads running on phones via a mobile web interface. Our main goal was to deliver the best possible real-time experience.

Hive and Bees

A Hive is controlled by several Bees, everything connected Peer-to-Peer (via WebRTC).

The Hive

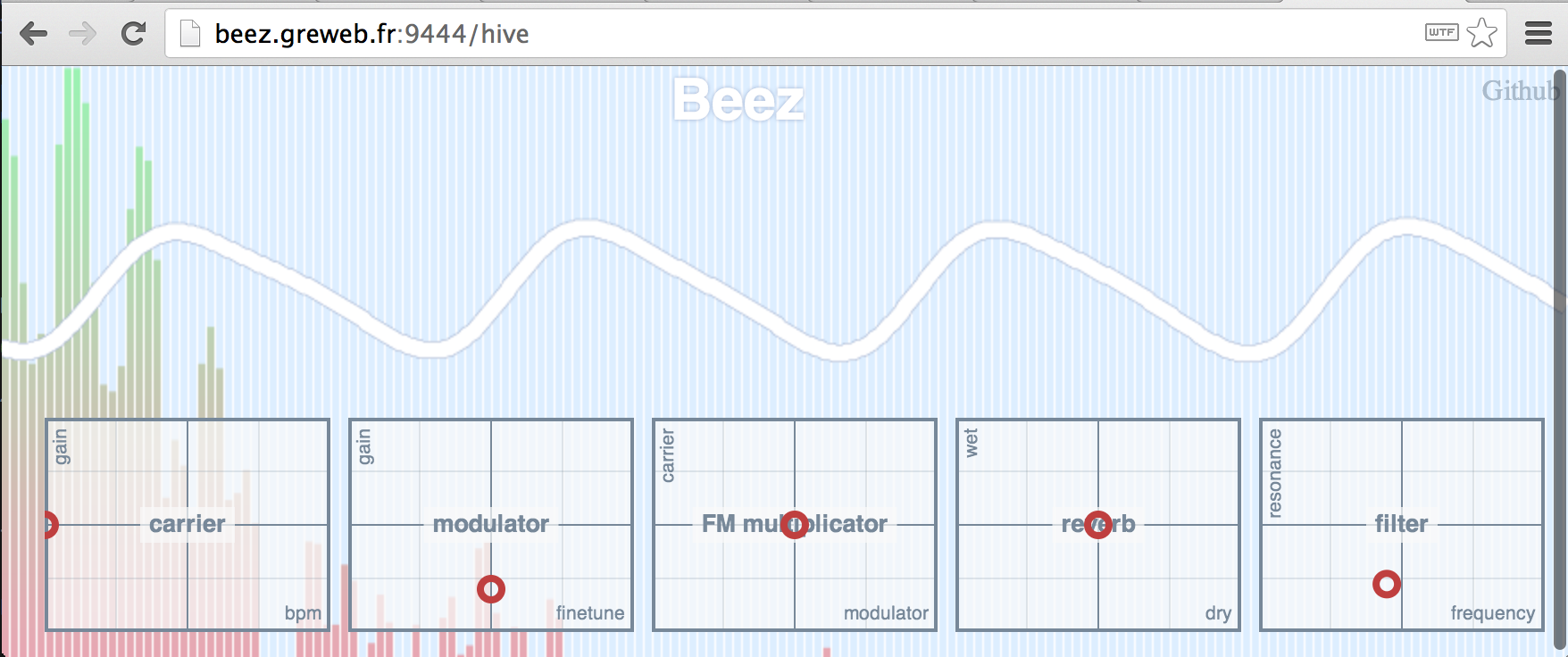

The Hive is a web page where the sound is generated and visualized (Web Audio API). It also shows the XY pads of the different effects in real time and lets you control them.

A Bee

A Bee is a mobile web page that lets you control the different sound effects with XY pads. For now, it only works on Chrome for Android (WebRTC is required).

Audio tech

We used the Web Audio API to generate the sound client-side on the Hive:

We have a note sequencer which plays a famous melody through a Frequency Modulator and various other effects. Controls let you change the BPM, the gain of the carrier and the modulator, the fine-tuning, the frequency multiplier (0.25, 0.5, 1, 1.5, 2) of the carrier and the modulator, the reverberation, and the filter (frequency and resonance). There is also a delay effect applied to both the left and right channels to produce a cool stereo effect.

That's quite basic audio stuff so far; I can't wait to experiment further and generate more complex sounds with this awesome Audio API. Again, our main goal was to establish a P2P connection between the hive and its bees.
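To make the carrier/modulator idea more concrete, here is a minimal sketch of a single FM voice built with the Web Audio API. It is not the actual Beez code: noteToFrequency, createFmVoice and the option names are hypothetical, and the sequencer, reverb, filter and delay are left out.

```javascript
// Frequency multipliers listed above for the carrier and the modulator.
const MULTIPLIERS = [0.25, 0.5, 1, 1.5, 2];

// Hypothetical helper: MIDI note number -> frequency in Hz (note 69 = A4 = 440 Hz).
function noteToFrequency(midiNote) {
  return 440 * Math.pow(2, (midiNote - 69) / 12);
}

// Hypothetical helper: wire one FM voice. `ctx` is an AudioContext.
function createFmVoice(ctx, baseFrequency, options) {
  const carrier = ctx.createOscillator();   // the oscillator we actually hear
  const modulator = ctx.createOscillator(); // wobbles the carrier's frequency
  const modulatorGain = ctx.createGain();   // modulation depth
  const carrierGain = ctx.createGain();     // output volume

  carrier.frequency.value = baseFrequency * options.carrierMultiplier;
  modulator.frequency.value = baseFrequency * options.modulatorMultiplier;
  modulatorGain.gain.value = options.modulatorGain;
  carrierGain.gain.value = options.carrierGain;

  // Connecting a node to an AudioParam adds its output to that parameter,
  // which is what produces the frequency modulation.
  modulator.connect(modulatorGain);
  modulatorGain.connect(carrier.frequency);
  carrier.connect(carrierGain);
  carrierGain.connect(ctx.destination);

  return { carrier, modulator, modulatorGain, carrierGain };
}
```

In a browser you would create the context with new AudioContext(), build a voice with one of the multipliers above, then call start() on both oscillators.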

Network architecture

**Every bee is connected to the hive with a bidirectional Peer-to-Peer connection thanks to [WebRTC][webrtc].**

Every time a bee user moves an effect controller on their phone, a position event is sent to the hive.

Basically:

    // Send the pad position to the hive whenever x or y changes.
    xyAxis.on("change:x change:y", function () {
      hive.send(["tabxy", this.get("tab"), this.get("x"), this.get("y")]);
    });

As you can see, we used Backbone.js models for events.

However, an Android device can trigger a lot of touch events per second, and the rate may depend on the device's speed. We shouldn't send all of these events over the network, because that can saturate it and cause lag. To avoid that, we need to throttle the touch events before sending them.

This is done transparently with the _.throttle function. We chose to throttle at 50 milliseconds, which means at most 20 events per second, and that is good enough for the human eye.

    // Same handler, but it sends at most once every 50 ms.
    xyAxis.on(
      "change:x change:y",
      _.throttle(function () {
        hive.send(["tabxy", this.get("tab"), this.get("x"), this.get("y")]);
      }, 50)
    );
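To show what throttling does to the event stream, here is a simplified stand-in for a throttle function. It is not Underscore's implementation: by default _.throttle also fires a trailing call at the end of the wait period, while this sketch simply drops calls that arrive too early. The name simpleThrottle and the injectable clock are hypothetical and only there to make the behavior easy to test.

```javascript
// Simplified, leading-edge-only throttle (NOT Underscore's implementation).
// `now` can be replaced by a fake clock in tests.
function simpleThrottle(fn, waitMs, now = Date.now) {
  let lastCall = -Infinity;
  return function (...args) {
    const time = now();
    if (time - lastCall >= waitMs) {
      lastCall = time;
      // Forward `this` so handlers can still use this.get(...).
      return fn.apply(this, args);
    }
    // Calls arriving less than waitMs after the last accepted call are dropped.
  };
}
```

With a 50 ms wait, events arriving at 0, 10, 49, 50, 60 and 100 ms would result in only three calls, at 0, 50 and 100 ms.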

We also use a few other events:

- A bee can send "tabxychanging" and "tabopen", respectively to signal that the cursor has been pressed or released and that a new tab has been opened.

- When the Hive receives a "tabopen", it sends a "tabxy" event back to the bee with the current value of that tab, so that the bee interface can initialize the cursor at the current XY position.

WebSockets vs WebRTC

Most "real-time" web experiments you see on the Internet today use WebSockets. WebSockets are good: they are a significant evolution from the Ajax years.

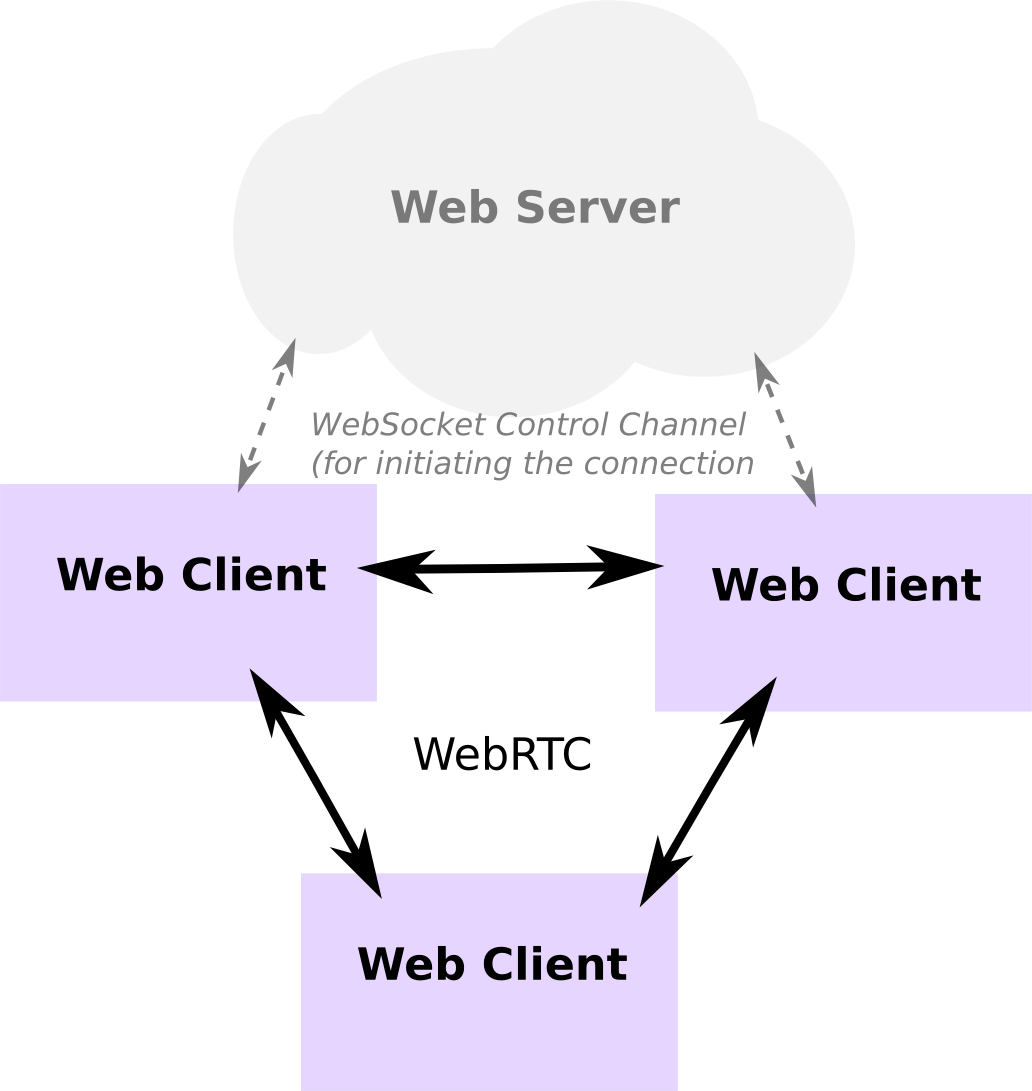

WebSocket is a protocol on top of TCP that links a browser to a server over a bidirectional connection. Getting two clients to communicate generally consists of sending messages to the server, which broadcasts them to all clients (see the diagram).
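As an illustration of that relay pattern, here is a minimal in-memory sketch of the server's job. It involves no real WebSocket server: createRelay is a hypothetical name, and each client is assumed to be any object with a send method, which is the method a WebSocket connection exposes.

```javascript
// Hypothetical in-memory relay: the server forwards each message it
// receives to every connected client except the sender.
function createRelay() {
  const clients = new Set();
  return {
    join(client) {
      clients.add(client);
    },
    leave(client) {
      clients.delete(client);
    },
    // Returns how many copies the server had to send.
    broadcast(sender, text) {
      let sent = 0;
      for (const client of clients) {
        if (client !== sender) {
          client.send(text);
          sent += 1;
        }
      }
      return sent;
    },
  };
}
```

Every message goes through the server once on the way in and once per recipient on the way out.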

This architecture has some advantages:

- Simple to understand, easy to use.

- We can easily implement some server validation.

But also has some drawbacks:

- Not always easy to get through proxies (e.g. through an nginx front server).

- Mostly text communication in practice (the protocol does define binary frames).

- Bandwidth intensive (all the traffic goes back and forth through your server).

- CPU intensive. (e.g. receiving 20 messages per second from 10 clients can be a lot for a small server, especially if you are doing some message processing)

(The last two "cons" are scalability issues)
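A rough back-of-the-envelope calculation shows how quickly this grows. It assumes that each client sends at the maximum rate a 50 ms throttle allows and that the server relays every message to all the other clients. Both function names are hypothetical.

```javascript
// Maximum messages per second allowed by a throttle interval in milliseconds.
function maxRate(throttleMs) {
  return 1000 / throttleMs;
}

// Messages per second handled by a relaying server, assuming every client
// sends at `ratePerClient` and each message is forwarded to all other clients.
function relayLoad(clientCount, ratePerClient) {
  const inbound = clientCount * ratePerClient;
  const outbound = inbound * (clientCount - 1);
  return { inbound, outbound };
}

const load = relayLoad(10, maxRate(50));
console.log(load); // { inbound: 200, outbound: 1800 }
```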

WebRTC (Web Real-Time Communication) is a new web technology that helps connect browsers Peer-to-Peer.

WebRTC was designed for transferring binary data such as files, audio and video (e.g. a webcam stream). Of course, we can still use it for text.

Unlike WebSockets, establishing a P2P connection between two web clients takes multiple steps, because the two clients must work out the best network path to reach each other. This resolution phase requires the clients to exchange messages, and for that we can use WebSockets as a "Control Channel".

But once the two web clients are connected, they basically don't need the web server anymore and can communicate directly with each other. If the two clients are on the same local network, they should communicate directly through that local network. That reduces the server load and should significantly decrease the latency.
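The connection steps described above can be sketched as follows. This is an illustration, not the Beez code: it uses the promise-based RTCPeerConnection API found in current browsers (at the time of the hackday the API was vendor-prefixed and callback-based), it assumes the data goes over a data channel, and the signaling object, the message format and the "beez" label are all hypothetical. The signaling object is assumed to serialize messages and pass them over the WebSocket Control Channel.

```javascript
// Forward locally discovered ICE candidates (possible network paths)
// to the other peer through the control channel.
function forwardIceCandidates(pc, signaling) {
  pc.onicecandidate = (event) => {
    // A null candidate means candidate gathering is finished.
    if (event.candidate) {
      signaling.send({ type: "candidate", candidate: event.candidate });
    }
  };
}

// Calling side: create a data channel, then send an offer to the other peer.
async function startConnection(pc, signaling) {
  forwardIceCandidates(pc, signaling);
  const channel = pc.createDataChannel("beez"); // hypothetical label
  const offer = await pc.createOffer();
  await pc.setLocalDescription(offer);
  signaling.send({ type: "offer", description: pc.localDescription });
  return channel;
}

// Both sides: apply a message received from the control channel.
async function handleSignal(pc, signaling, message) {
  switch (message.type) {
    case "offer": {
      await pc.setRemoteDescription(message.description);
      const answer = await pc.createAnswer();
      await pc.setLocalDescription(answer);
      signaling.send({ type: "answer", description: pc.localDescription });
      break;
    }
    case "answer":
      await pc.setRemoteDescription(message.description);
      break;
    case "candidate":
      await pc.addIceCandidate(message.candidate);
      break;
  }
}
```

The answering side would also call forwardIceCandidates once on its own connection and would receive the channel through the connection's ondatachannel event. Once the channel is open, messages no longer go through the server.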

Play Framework / Akka Actors

Play Framework was used on the server side to build that WebSocket Control Channel, and Akka was convenient for handling peer communication and room management.

About the melody

Still haven't guessed where the melody comes from?

Well, here is the answer:

Awesome team!

Finally, I want to thank my teammates: @mrspeaker, @etaty, @NicuPrinFum, @drfars, @srenaultcontact, with whom we were able to build this demo from scratch in one day!